Repetition Action Counting Dataset

ShanghaiTech University

Github HomePage

[Download (OneDrive, extraction code: repcount)] [Download (BaiduNetDisk, extraction code: svip)]

Introduction

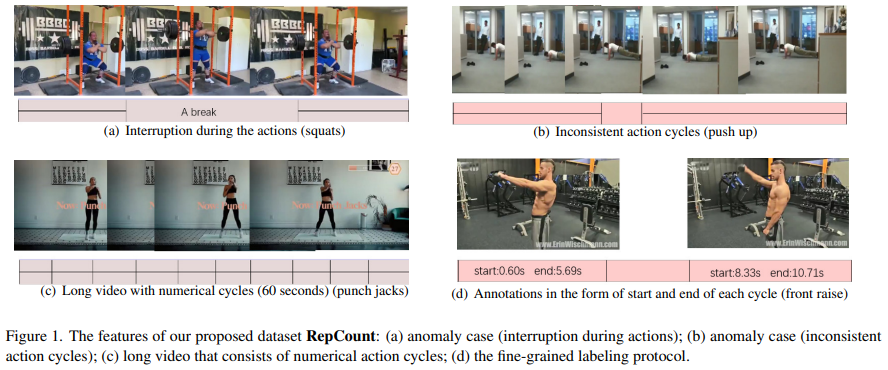

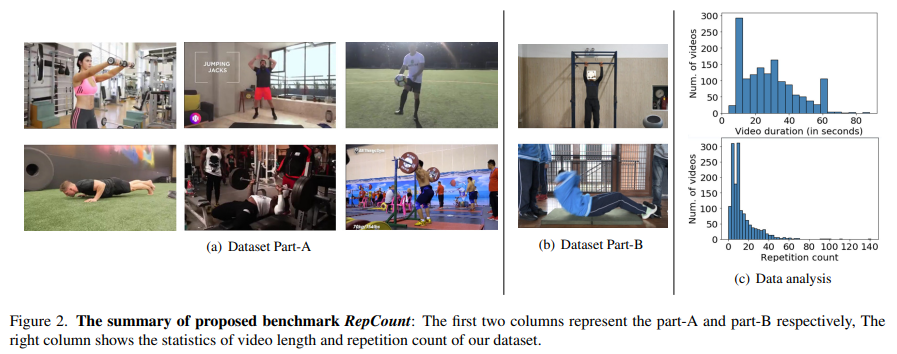

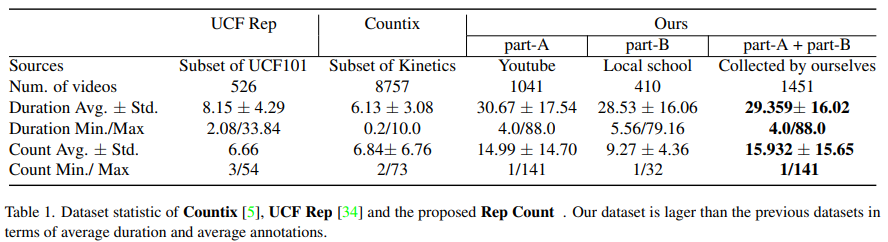

We introduce RepCount, a novel dataset for repetitive action counting. Its videos vary significantly in length and cover multiple kinds of anomaly cases. Each video is accompanied by fine-grained annotations that mark the beginning and end of each action period. The dataset consists of two subsets, Part-A and Part-B. The videos in Part-A are collected from YouTube, while those in Part-B record simulated physical examinations performed by junior school students and teachers.
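Because each annotation marks where an action period begins and ends, the repetition count of a video follows directly from its list of boundaries. The snippet below is a minimal sketch of that idea, not the dataset's official loader. It assumes, purely for illustration, that the boundaries of one video are stored as a flat list of frame indices alternating between start and end. The function names `periods_from_boundaries` and `repetition_count` are hypothetical.

```python
from typing import List, Tuple


def periods_from_boundaries(boundaries: List[int]) -> List[Tuple[int, int]]:
    """Group a flat [start, end, start, end, ...] list into (start, end) pairs.

    Assumption: this flat layout is illustrative; check the released
    annotation files for the actual storage format.
    """
    if len(boundaries) % 2 != 0:
        raise ValueError("expected an even number of boundary frames")
    # Even positions hold period starts, odd positions hold period ends.
    periods = list(zip(boundaries[0::2], boundaries[1::2]))
    for start, end in periods:
        if end < start:
            raise ValueError(f"period ends before it starts: ({start}, {end})")
    return periods


def repetition_count(boundaries: List[int]) -> int:
    """The ground-truth count is the number of annotated action periods."""
    return len(periods_from_boundaries(boundaries))


if __name__ == "__main__":
    example = [10, 42, 42, 80, 95, 130]  # made-up frame indices
    print(periods_from_boundaries(example))
    print(repetition_count(example))
```

Keeping the periods as explicit pairs, rather than only the count, preserves the gaps between repetitions (frames 80 to 95 in the made-up example), which is what distinguishes fine-grained annotation from a single count label.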

Dataset Format

The dataset consists of two subsets, Part-A and Part-B. For Part-A, we collected 1041 videos from YouTube. The action types include workout activities (squatting, pull-ups, front raises, etc.), athletic events (rowing, pommel horse, etc.), and other repetitive actions (e.g., soccer juggling). For Part-B, we recorded videos of volunteers performing exercises such as sit-ups and pull-ups. Part-B is constructed to validate model generalization. In brief, we provide 1451 videos accompanied by 19280 annotations. The videos in our dataset have an average length of 39.359 seconds.
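The headline figures above imply a couple of derived quantities that help when sizing storage or training time. The sketch below computes them from the three numbers stated in this section. It assumes the average length applies to all 1451 videos and that the annotations are spread over those same videos. The function name `dataset_summary` is hypothetical.

```python
NUM_VIDEOS = 1451             # total videos, as stated above
NUM_ANNOTATIONS = 19280       # total annotations, as stated above
MEAN_LENGTH_SECONDS = 39.359  # average video length, as stated above


def dataset_summary(num_videos: int, num_annotations: int, mean_length_s: float) -> dict:
    """Derive rough per-video and whole-dataset figures from the headline numbers."""
    return {
        # Average number of annotations attached to one video.
        "annotations_per_video": num_annotations / num_videos,
        # Approximate total footage, assuming the mean covers every video.
        "total_hours": num_videos * mean_length_s / 3600.0,
    }


if __name__ == "__main__":
    summary = dataset_summary(NUM_VIDEOS, NUM_ANNOTATIONS, MEAN_LENGTH_SECONDS)
    print(f"annotations per video: {summary['annotations_per_video']:.2f}")
    print(f"total footage (hours): {summary['total_hours']:.2f}")
```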

Data info:

Citation

If you find this useful, please cite our work as follows:

@article{hu2022transrac,
  title={TransRAC: Encoding Multi-scale Temporal Correlation with Transformers for Repetitive Action Counting},
  author={Hu, Huazhang and Dong, Sixun and Zhao, Yiqun and Lian, Dongze and Li, Zhengxin and Gao, Shenghua},
  journal={arXiv preprint arXiv:2204.01018},
  year={2022}
}