Impersonator

Liquid Warping GAN: A Unified Framework for Human Motion Imitation, Appearance Transfer and Novel View Synthesis

ICCV 2019

Wen Liu*, Zhixin Piao*, Min Jie, Wenhan Luo, Lin Ma, Shenghua Gao

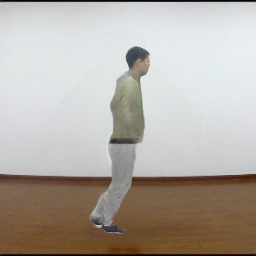

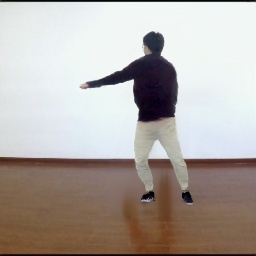

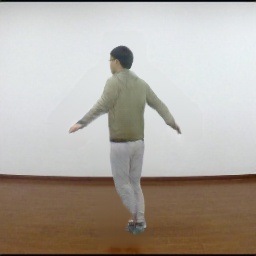

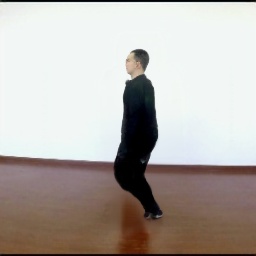

Motion Imitation

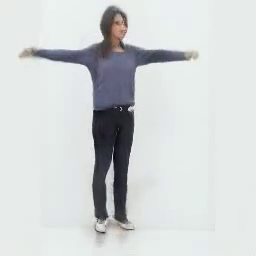

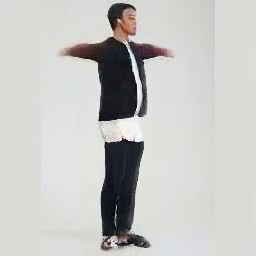

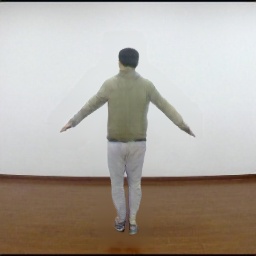

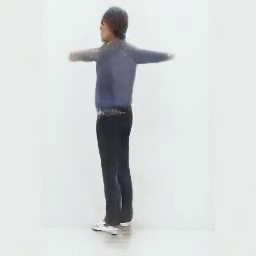

Appearance Transfer

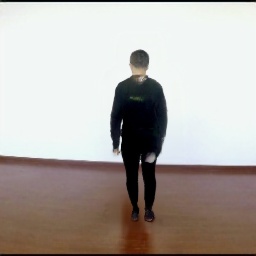

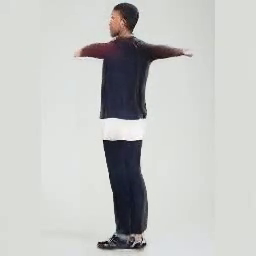

Novel View Synthesis

Abstract

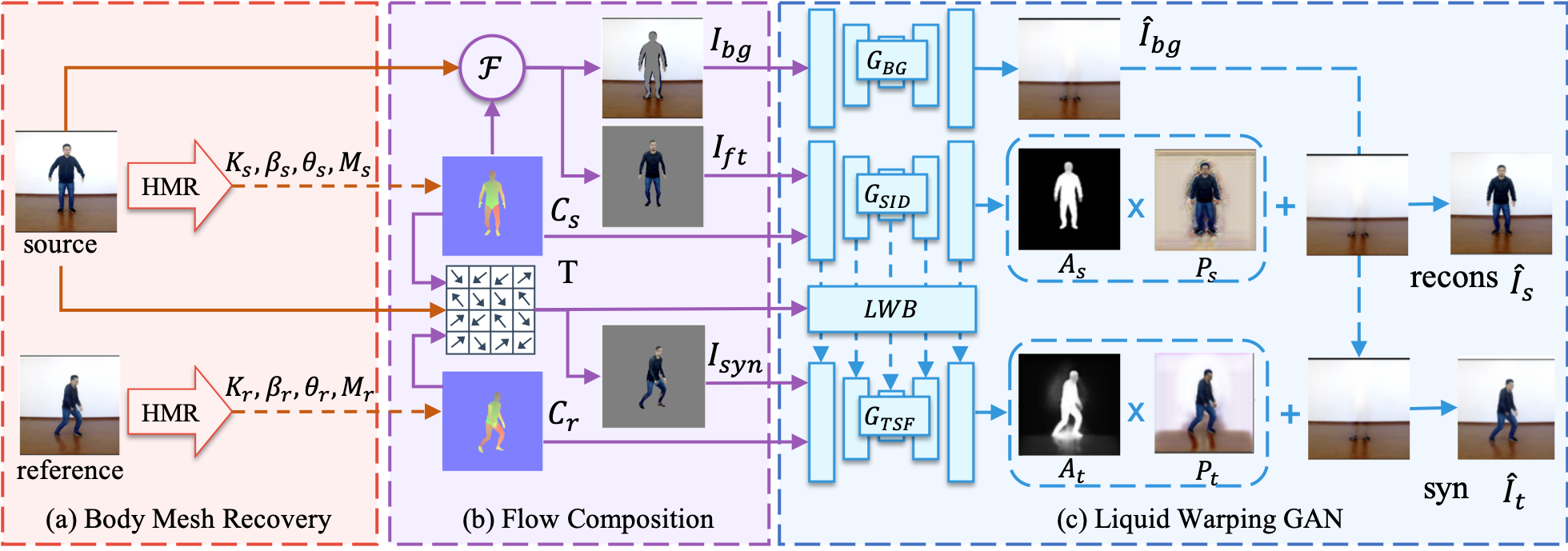

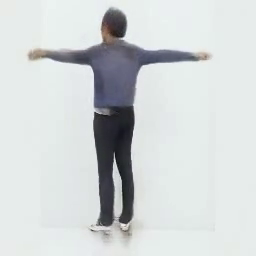

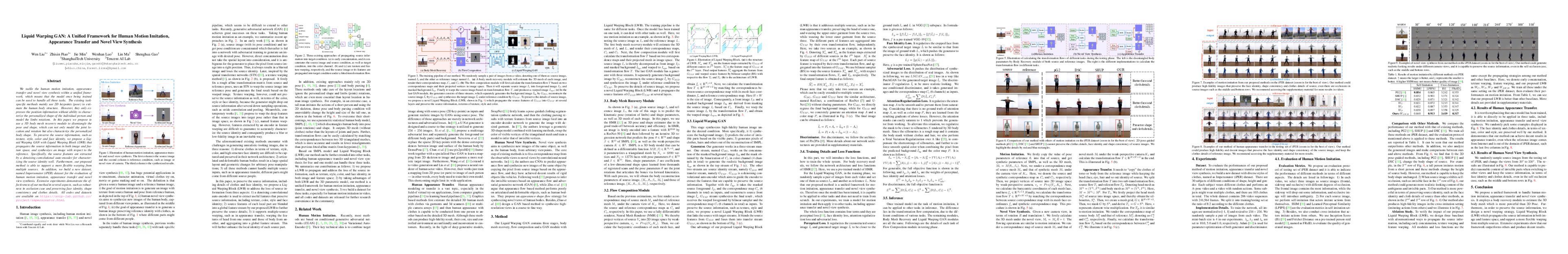

We tackle human motion imitation, appearance transfer, and novel view synthesis within a unified framework, which means that the model, once trained, can be used to handle all of these tasks. Existing task-specific methods mainly use 2D keypoints (pose) to estimate the human body structure. However, keypoints only express position information and can neither characterize the personalized shape of an individual nor model limb rotations. In this paper, we propose to use a 3D body mesh recovery module to disentangle pose and shape, which can not only model joint location and rotation but also characterize the personalized body shape. To preserve source information such as texture, style, color, and face identity, we propose a Liquid Warping GAN with a Liquid Warping Block (LWB) that propagates the source information in both image and feature spaces and synthesizes an image with respect to the reference. Specifically, the source features are extracted by a denoising convolutional auto-encoder so that they characterize the source identity well. Furthermore, our method supports more flexible warping from multiple sources. In addition, we build a new dataset, the Impersonator (iPER) dataset, for the evaluation of human motion imitation, appearance transfer, and novel view synthesis. Extensive experiments demonstrate the effectiveness of our method in several aspects, such as robustness to occlusion and the preservation of face identity, shape consistency, and clothing details.

Framework Overview
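As a rough illustration of the feature-space propagation mentioned in the abstract, the sketch below warps one or more source feature maps onto the target layout with bilinear sampling and fuses them with the target features. It is not the released implementation: the function names `bilinear_sample` and `liquid_warp` are hypothetical, NumPy stands in for a deep learning framework, the sampling grids are assumed to be given as absolute `(x, y)` pixel coordinates, and fusion by element-wise addition is an assumption made for this example.

```python
import numpy as np


def bilinear_sample(feat, grid):
    """Sample feat (C, H, W) at fractional pixel coordinates.

    grid has shape (H_out, W_out, 2) and stores (x, y) positions in the
    source feature map. Positions outside the map contribute zeros.
    """
    c, h, w = feat.shape
    x, y = grid[..., 0], grid[..., 1]
    x0 = np.floor(x).astype(int)
    y0 = np.floor(y).astype(int)
    x1, y1 = x0 + 1, y0 + 1
    wx, wy = x - x0, y - y0  # fractional offsets in [0, 1)

    out = np.zeros((c,) + x.shape, dtype=float)
    corners = (
        (x0, y0, (1 - wx) * (1 - wy)),
        (x1, y0, wx * (1 - wy)),
        (x0, y1, (1 - wx) * wy),
        (x1, y1, wx * wy),
    )
    for xi, yi, weight in corners:
        valid = (xi >= 0) & (xi < w) & (yi >= 0) & (yi < h)
        xi_c = np.clip(xi, 0, w - 1)
        yi_c = np.clip(yi, 0, h - 1)
        # Out-of-range corners are masked out rather than clamped.
        out += feat[:, yi_c, xi_c] * (weight * valid)
    return out


def liquid_warp(target_feat, source_feats, grids):
    """Add every warped source feature map to the target features.

    Assumption for this sketch: fusion is plain element-wise addition,
    with one sampling grid per source.
    """
    out = np.asarray(target_feat, dtype=float).copy()
    for feat, grid in zip(source_feats, grids):
        out += bilinear_sample(np.asarray(feat, dtype=float), grid)
    return out
```

Because each source brings its own sampling grid, the same function accepts a single source or several; in a full model the grids would presumably be derived from the correspondence between the source and reference body meshes rather than supplied by hand.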

Paper Video

Application

Citation

If you find this useful, please cite our work as follows:

@InProceedings{lwb2019,
    title={Liquid Warping GAN: A Unified Framework for Human Motion Imitation, Appearance Transfer and Novel View Synthesis},
    author={Wen Liu and Zhixin Piao and Min Jie and Wenhan Luo and Lin Ma and Shenghua Gao},
    booktitle={The IEEE International Conference on Computer Vision (ICCV)},
    year={2019}
}